Consider: what if dating apps were used by blind people?

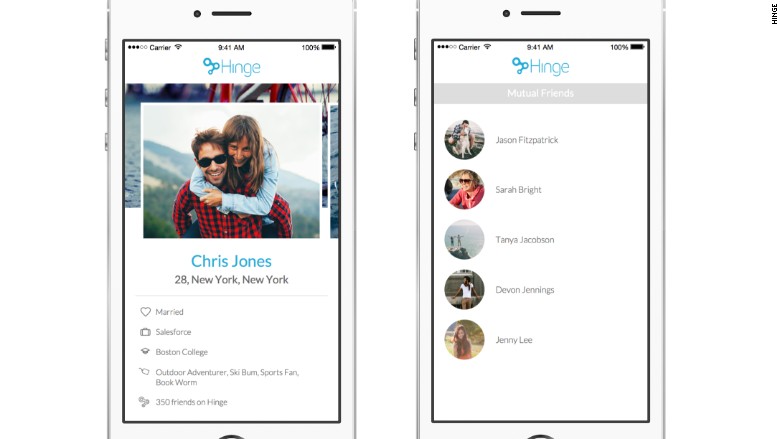

Imagine navigating the already crazy world of Tinder or Bumble without being able to see the individuals. For anyone who is unfamiliar with a screen reader’s output, here’s what it might sound like:

So does anyone have any idea what Chad looks like, what he likes, or whether you want to swipe left or right on him? Nope, didn’t think so.

As a sighted person, I never really thought about the issues that someone with a visual impairment might face in the mobile dating realm. It took my blind friend sitting at my desk and handing me his phone so I could verbally describe the person in a profile for me to realize what a terrible user experience it is to use a dating platform with a screen reader.

I half-jokingly told my friend that, to get him to leave me alone so I could get back to work, I was going to write a program that would automatically caption the photos in a profile for him. We laughed about it for a few seconds, and then we remembered that we were both computer science Ph.D. students and could actually accomplish this relatively easily. It wouldn’t be too hard to have him screenshot a profile, send the image to a server, do some computer vision magic, and, voilà, send back a caption that gave a little more insight into who was actually in the profile.
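The screenshot-to-caption round trip could look something like the minimal sketch below. It assumes a plain HTTP server that accepts the raw screenshot bytes in a POST body and replies with a plain-text caption, using only Python's standard library. `caption_image`, `CaptionHandler`, and `make_server` are hypothetical names, and the stub caption stands in for the computer vision step; this is an illustration of the idea, not the project's actual server.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def caption_image(image_bytes: bytes) -> str:
    """Hypothetical placeholder for the computer vision step.

    A real version would decode `image_bytes` and run the classifiers;
    this stub ignores the image and returns a fixed caption.
    """
    return "A person, smiling, photographed outdoors."


class CaptionHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        # Read the raw screenshot bytes sent by the phone.
        length = int(self.headers.get("Content-Length", 0))
        image_bytes = self.rfile.read(length)

        # Caption the image and send the text back as the response body.
        body = caption_image(image_bytes).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format: str, *args) -> None:
        # Silence the default per-request logging to stderr.
        pass


def make_server(host: str = "127.0.0.1", port: int = 8000) -> HTTPServer:
    # Bind the handler to an address; call serve_forever() on the result to run it.
    return HTTPServer((host, port), CaptionHandler)
```

To run it, call `make_server().serve_forever()`; the phone side then only needs to POST each screenshot and read the response body aloud through the screen reader.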

This idea conveniently came near the beginning of a new semester in which I was enrolled in several machine learning courses, so I proposed it to one of my groups as our semester project. They loved the idea.

To start, we surveyed college students to see what kind of information people look for in a dating profile. For each category, respondents could choose up to three options that were important to them in a Tinder profile.

Asterisk: This survey was collected very informally, and it covers the general college student population, not the more specific subpopulation of people with visual impairments. Don’t take these graphs as thoroughly explored scientific results, but rather as a brief look into a young adult’s head while they evaluate a dating profile.
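For a multi-select question like this, where each respondent picks up to three options per category, the tally could be sketched as follows. This assumes each respondent's answer for one category arrives as a list of option names; `tally_category` and `MAX_CHOICES` are hypothetical names, and the example responses are made up for illustration, not the survey's data.

```python
from collections import Counter
from typing import Iterable, List

MAX_CHOICES = 3  # respondents could pick at most three options per category


def tally_category(responses: Iterable[List[str]]) -> Counter:
    """Count how many respondents chose each option in one category.

    Each element of `responses` is one respondent's selections. Duplicate
    selections by the same respondent are counted once, and a respondent
    with more than MAX_CHOICES distinct options is rejected.
    """
    counts: Counter = Counter()
    for selections in responses:
        unique = set(selections)
        if len(unique) > MAX_CHOICES:
            raise ValueError(
                f"{len(unique)} options selected; at most {MAX_CHOICES} allowed"
            )
        counts.update(unique)
    return counts


# Made-up responses for illustration only (not the survey's data).
example = [
    ["hair color", "smiling", "indoor/outdoor"],
    ["smiling", "body shape"],
    ["hair length", "smiling", "hair color"],
]
print(tally_category(example).most_common(2))
# [('smiling', 3), ('hair color', 2)]
```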

As expected, some patterns emerged from the survey results. When evaluating a profile, respondents considered several characteristics of the person in it to be important. They generally wanted to know the person’s hair color and length, whether they were smiling, whether the photo was taken indoors or outdoors, and the person’s body shape.
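Most of these attributes can be phrased as a yes/no question, which is the framing the project's classifiers used. A minimal sketch of turning several independent binary-classifier outputs into one sentence, assuming each classifier reports the probability of the first label in its pair and that 0.5 is the decision threshold; the attribute names, `LABELS`, `decide`, `compose_caption`, and the sentence template are all hypothetical.

```python
from typing import Dict

# Hypothetical attributes: each binary classifier reports the probability
# that the first label in its pair applies.
LABELS = {
    "hair_length": ("long", "short"),
    "hair_color": ("dark", "light"),
    "expression": ("smiling", "not smiling"),
    "setting": ("outdoors", "indoors"),
}


def decide(attribute: str, probability: float, threshold: float = 0.5) -> str:
    """Pick one label of the pair by thresholding the classifier's probability."""
    positive, negative = LABELS[attribute]
    return positive if probability >= threshold else negative


def compose_caption(probabilities: Dict[str, float]) -> str:
    """Turn independent binary-classifier outputs into one caption sentence."""
    words = {name: decide(name, p) for name, p in probabilities.items()}
    return (
        f"A person with {words['hair_length']}, {words['hair_color']} hair, "
        f"{words['expression']}, photographed {words['setting']}."
    )


print(compose_caption(
    {"hair_length": 0.81, "hair_color": 0.30, "expression": 0.92, "setting": 0.67}
))
# A person with long, light hair, smiling, photographed outdoors.
```

Because each classifier is independent, one group member can retrain or replace a single classifier without touching the others; the trade-off is that the template cannot describe anything outside the fixed set of attributes.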

Because this was a course on statistical machine learning, the syllabus only barely got into deep neural networks, so we took a relatively naive approach to generating captions. We split the task into several binary classifiers (long/short hair, light/dark hair, indoor/outdoor, smiling/not smiling, etc.), and each group member was responsible for one of the classifiers. The system that we came up with is demonstrated in the video below:

There is a noticeable lag after the screenshot is taken while we wait for the phone to notice that a new screenshot has been added to the screenshot folder. As demonstrated, this system is still a prototype. There are plenty of bugs to squash and things to improve, but I think it does a great job of illustrating the proof of concept.
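Part of a lag like this can come from how new screenshots are detected: if the client polls the screenshot folder on a timer, a new file can sit unnoticed for up to one polling interval. The sketch below is a Python stand-in for that idea, not the phone-side code the project used; `poll_once`, `watch_for_screenshots`, and the one-second default interval are assumptions.

```python
import os
import time
from typing import Iterator, List, Set, Tuple


def poll_once(folder: str, seen: Set[str]) -> Tuple[List[str], Set[str]]:
    """Return paths of files in `folder` not in `seen`, plus the new snapshot."""
    current = set(os.listdir(folder))
    new_paths = [os.path.join(folder, name) for name in sorted(current - seen)]
    return new_paths, current


def watch_for_screenshots(folder: str, poll_seconds: float = 1.0) -> Iterator[str]:
    """Yield each file that appears in `folder` after watching starts.

    Because the folder is only checked every `poll_seconds`, a new
    screenshot may wait up to that long before it is yielded.
    """
    seen = set(os.listdir(folder))
    while True:
        time.sleep(poll_seconds)
        new_paths, seen = poll_once(folder, seen)
        yield from new_paths
```

Shortening `poll_seconds` reduces the worst-case delay at the cost of more frequent directory scans; a file-change notification from the operating system, where the platform offers one, avoids polling altogether.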

The clear next step is to move away from naive classifiers and instead caption the images with a deep neural network pretrained on ImageNet. I will update this post once that version is implemented, with more thorough and explicit results, and maybe even some feedback from users with visual impairments.

So inevitably, some of you are asking the relatively insensitive, though not entirely irrelevant, question: “Why the heck does it matter what the person looks like? He can’t see them anyway.” It does matter, I promise. Much more can be gleaned from someone’s profile photos than their physical looks. You can gain information from the environment: Chad, for example, is an outdoorsman who likes boats, dirt bikes, and four-wheelers. Maybe that’s your thing, maybe it isn’t, but without being able to see the photos, that information is inaccessible. You can also infer some aspects of a person’s lifestyle from the photos in their profile. If the profile consists of five shirtless gym selfies and you’re not particularly the gym-going type, this information might help you decide whether the person is worth swiping right on.

So I guess the moral of the story is that accessibility, sadly, is not something designers generally think about when building technology. This creates challenges for many people and puts up barriers to the technological world. Computer vision has proven to be an incredible tool for tearing down some of these barriers for people with visual impairments, but it shouldn’t have to come to that. In my opinion, one of the biggest problems in accessibility is plain awareness. In my computer science classes, accessibility has NEVER been mentioned. All it would take is fifteen minutes for a professor to introduce the concept of universal design (the idea that we should design things so that they are accessible to everyone).
